14 Nov 2016

Eugene / Learning, Stanford Machine Learning

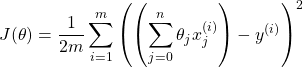

Gradient Descent For Multiple Variables

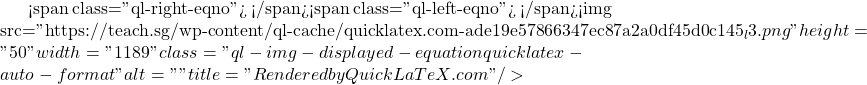

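Cost function: $J(\theta_0, \theta_1, \dots, \theta_n) = \frac{1}{2m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2$, where $h_\theta(x) = \theta^T x = \theta_0 x_0 + \theta_1 x_1 + \dots + \theta_n x_n$ with $x_0 = 1$, and $m$ is the number of training examples.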
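As a minimal sketch (plain Python, not the course's own Octave code), the cost function can be evaluated term by term, mirroring the sum above. The helper names `hypothesis` and `compute_cost` and the tiny data set are made up for illustration; each row of `X` is assumed to already include the leading $x_0 = 1$.

```python
def hypothesis(theta, x):
    """h_theta(x) = theta_0*x_0 + theta_1*x_1 + ... + theta_n*x_n (x_0 = 1)."""
    return sum(t * xj for t, xj in zip(theta, x))


def compute_cost(X, y, theta):
    """J(theta) = 1/(2m) * sum over i of (h_theta(x^(i)) - y^(i))^2."""
    m = len(y)
    return sum((hypothesis(theta, x) - yi) ** 2 for x, yi in zip(X, y)) / (2 * m)


# Hypothetical toy data: m = 3 examples, n = 2 features plus the x_0 = 1 column.
X = [[1.0, 1.0, 2.0],
     [1.0, 2.0, 0.0],
     [1.0, 3.0, 1.0]]
y = [5.0, 4.0, 6.0]

print(compute_cost(X, y, [1.0, 1.0, 1.0]))  # 0.5
print(compute_cost(X, y, [2.0, 1.0, 1.0]))  # 0.0 (this theta fits the data exactly)
```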

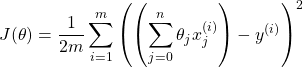


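Gradient descent: repeat until convergence

$$\theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta_0, \theta_1, \dots, \theta_n)$$

updating every $\theta_j$ ($j = 0, 1, \dots, n$) simultaneously, which breaks down into

$$\theta_j := \theta_j - \alpha \frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)}$$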
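A minimal batch gradient descent sketch of the expanded update rule follows, again in plain Python. The function name `gradient_descent`, the toy data, the learning rate `alpha = 0.1` and the iteration count are hand-picked assumptions for this example; no feature scaling is applied.

```python
def gradient_descent(X, y, theta, alpha, num_iters):
    """Batch gradient descent for linear regression with n features.

    Every iteration applies, for all j = 0..n simultaneously:
        theta_j := theta_j - alpha * (1/m) * sum_i (h_theta(x^(i)) - y^(i)) * x_j^(i)
    Each row of X is assumed to start with x_0 = 1.
    """
    m = len(y)
    theta = list(theta)
    for _ in range(num_iters):
        # Prediction errors h_theta(x^(i)) - y^(i), all computed with the current theta.
        errors = [sum(t * xj for t, xj in zip(theta, x)) - yi for x, yi in zip(X, y)]
        # Build the complete new parameter list before replacing the old one,
        # so every theta_j is updated from the same (old) theta.
        theta = [
            theta[j] - alpha * sum(e * x[j] for e, x in zip(errors, X)) / m
            for j in range(len(theta))
        ]
    return theta


# Hypothetical toy data: y = 2 + 1*x_1 + 1*x_2 holds exactly for every row.
X = [[1.0, 1.0, 2.0],
     [1.0, 2.0, 0.0],
     [1.0, 3.0, 1.0]]
y = [5.0, 4.0, 6.0]

theta = gradient_descent(X, y, [0.0, 0.0, 0.0], alpha=0.1, num_iters=10000)
print([round(t, 4) for t in theta])  # [2.0, 1.0, 1.0]
```

Building the new `theta` list from the old one before assigning it is what makes the update simultaneous; overwriting `theta[j]` in place inside the loop would let later parameters use already-updated values.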

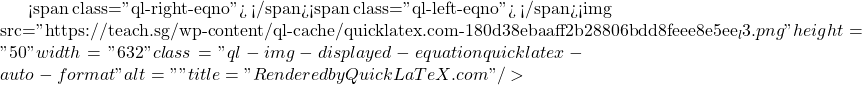

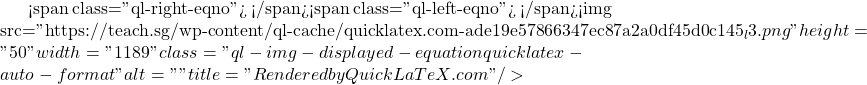